Why Zapper Edge Is Redefining Large File Transfers for the Enterprise

Is large-scale file transfer still an operational bottleneck? Zapper Edge brings cloud-native scale, secure orchestration, and cost-intelligent routing to set a new benchmark for performance and reliability.

HIGH PERFORMANCE

10/12/2025 · 2 min read

This article is part of Zapper Edge’s Zero Trust and AI-Ready Managed File Transfer knowledge series for regulated enterprises.

Introduction

Moving large files—often tens or hundreds of gigabytes—is no longer an exception. It is a daily operational reality across healthcare, media, logistics, financial services, and research-driven enterprises.

Yet most enterprise file transfer platforms remain rooted in legacy design: heavy, expensive, and operationally rigid. They rely on permanently running infrastructure sized for peak load, introduce multiple processing hops, and struggle to scale efficiently when demand fluctuates.

The result is predictable: high cost, underutilized capacity, and performance that degrades exactly when business pressure is highest.

Zapper Edge was built to change this model fundamentally.

The Traditional Approach: Rigid, Costly, and Inefficient

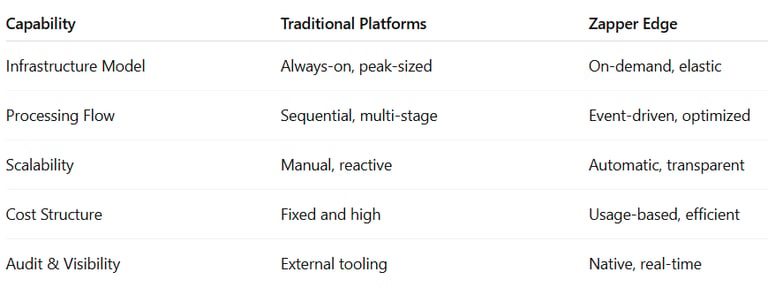

Conventional enterprise transfer platforms typically operate with three structural limitations:

Always-On Infrastructure

Systems are provisioned for worst-case volume and kept running continuously, regardless of actual utilization. This leads to persistent infrastructure spend, even during idle periods.

Multi-Stage Processing Pipelines

Large files are often staged, copied, scanned, and processed sequentially across multiple layers. Each handoff adds latency, complexity, and operational risk.

Fixed Cost, Variable Performance

Despite heavy investment, performance still degrades during spikes. Scaling usually requires manual intervention and further capital expense.

Zapper Edge’s Approach: Cloud-Native, Intelligent, and Elastic

Zapper Edge inverts the traditional model by designing file transfer as an event-driven, cloud-native service rather than a permanently running system.

Elastic by Design

Resources are provisioned only when transfers are active. When demand rises, capacity scales automatically. When workloads subside, infrastructure scales down—ensuring you pay only for what you consume.
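As a rough illustration of the scale-with-demand idea, the sketch below computes how many workers to run from the number of active transfers. The function name, the per-worker capacity, and the worker cap are all hypothetical values chosen for the example; they do not describe Zapper Edge's actual scaling policy.

```python
import math


def desired_workers(active_transfers: int,
                    transfers_per_worker: int = 4,
                    max_workers: int = 50) -> int:
    """Return a worker count for the current load (hypothetical policy)."""
    if active_transfers <= 0:
        # Nothing to move: scale to zero so idle time incurs no compute cost.
        return 0
    # Round up so a partially loaded worker is still provisioned.
    needed = math.ceil(active_transfers / transfers_per_worker)
    # Cap the fleet so a burst cannot grow capacity without bound.
    return min(needed, max_workers)
```

With these assumed defaults, an idle system runs no workers, nine concurrent transfers call for three, and very large bursts are held at the cap.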

Direct, Optimized Data Flow

Files move through streamlined pipelines without unnecessary staging or redundant copying, reducing latency and minimizing operational overhead.
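One common way to avoid staging is to stream a file in chunks and compute its checksum in the same pass, so the data is read only once. The sketch below shows that general technique using in-memory streams; `stream_copy` and the chunk size are assumptions made for illustration, not a description of the product's internals.

```python
import hashlib
import io
from typing import BinaryIO

CHUNK_SIZE = 8 * 1024 * 1024  # 8 MiB per read; an assumed value for illustration


def stream_copy(src: BinaryIO, dst: BinaryIO,
                chunk_size: int = CHUNK_SIZE) -> tuple[int, str]:
    """Copy src to dst chunk by chunk, hashing the data in the same pass."""
    digest = hashlib.sha256()
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break  # end of stream
        digest.update(chunk)  # integrity check without a second read
        dst.write(chunk)      # forward immediately, no intermediate copy
        total += len(chunk)
    return total, digest.hexdigest()


# Usage with in-memory streams standing in for a real source and destination.
source = io.BytesIO(b"x" * 100)
sink = io.BytesIO()
size, checksum = stream_copy(source, sink, chunk_size=32)
```

Because only one chunk is held at a time, memory use stays bounded by the chunk size regardless of how large the file is.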

Built-In Observability and Governance

Every transfer, access event, policy check, and security action is logged in real time. Audit trails and operational visibility are native capabilities, not afterthoughts.
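To make the idea of a native audit trail concrete, the following sketch emits one structured JSON record per event. The field names and the `audit_event` helper are hypothetical; the actual log schema used by Zapper Edge is not described in this article.

```python
import json
from datetime import datetime, timezone


def audit_event(action: str, actor: str, resource: str, outcome: str) -> str:
    """Build one audit record as a JSON line (hypothetical schema)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "action": action,      # e.g. "transfer.start" or "policy.check"
        "actor": actor,        # user or service that triggered the event
        "resource": resource,  # file or path the event applies to
        "outcome": outcome,    # e.g. "allowed", "denied", "completed"
    }
    # Sorted keys keep lines stable and easy to compare or index.
    return json.dumps(record, sort_keys=True)
```

Writing one self-describing line per event lets downstream tools filter by actor, resource, or outcome without parsing free-form text.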

A Practical Comparison

Business Impact

A mid-sized enterprise operating large daily data exchanges migrated from a legacy, over-provisioned file transfer stack to Zapper Edge.

The results were immediate:

Transfer completion times reduced significantly

No performance degradation during peak volumes

Infrastructure costs lowered by over 60%

Full operational and audit visibility without additional tooling

All achieved without re-architecting applications or managing new infrastructure.

Closing Perspective

Enterprise file transfer should no longer be a hidden bottleneck powered by idle servers and manual scaling.

Zapper Edge is engineered for modern data movement: elastic, secure, observable, and cost-intelligent by design.

Whether you are moving medical imaging, financial records, legal archives, or high-volume analytics data, Zapper Edge delivers the performance, governance, and resilience that today’s enterprises demand.

To see how Zapper Edge transforms large-scale file movement, request a demo or write to us at contactus@zapperedge.com.

Zapper Edge LLC

1621, Central Avenue,

Cheyenne, WY 82001, USA
