Bringing Compute to Storage for Cloud AI Workflows

"Can compute-to-storage accelerate cloud AI? Zapper's in-place compute model shrinks data movement, speeds model iteration, and enforces governance—fueling scalable, secure AI workflows."

ARCHITECTURE AND SCALABILITY | AI READY MFT

10/12/2025 | 1 min read

This article is part of Zapper Edge’s Zero Trust and AI-Ready Managed File Transfer knowledge series for regulated enterprises.

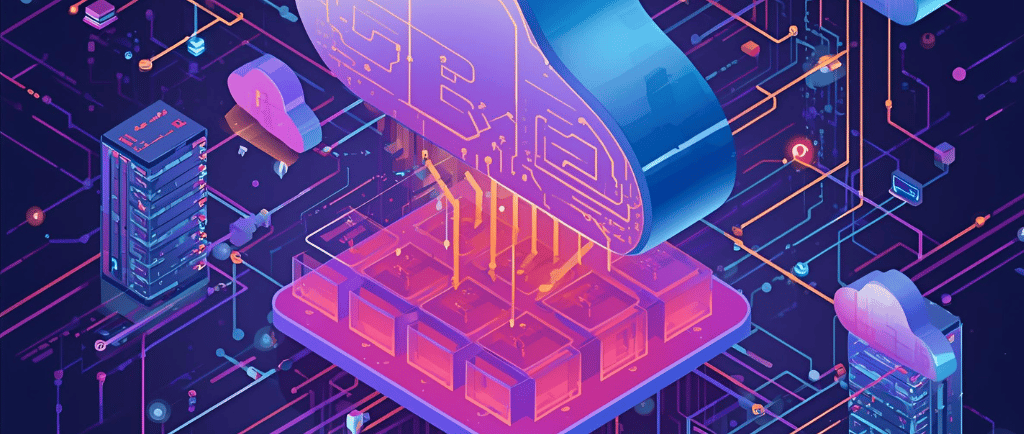

Traditional cloud architectures separate compute from storage, so data must cross the network before AI workloads can process it. This creates bottlenecks for data-intensive workflows such as model training and inference. Prabhat Sharma explores a paradigm shift: moving computation into or next to the storage layer (e.g., computational storage drives, SmartNICs, or in-storage processing).

Key Insights:

Bandwidth & Latency Challenges: Moving petabytes of data between storage and compute clusters wastes time and resources, especially in distributed training.
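To put the bandwidth problem in numbers, here is a back-of-envelope sketch. The 1 PB dataset, the 100 Gb/s link, and the 90% filtering ratio are illustrative assumptions rather than figures from the article, and `transfer_hours` is a hypothetical helper written only for this example.

```python
def transfer_hours(dataset_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move dataset_bytes over a link rated at link_gbps gigabits per second.

    efficiency (0 < efficiency <= 1) roughly models protocol overhead and contention.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("need link_gbps > 0 and 0 < efficiency <= 1")
    bits = dataset_bytes * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


PETABYTE = 1e15  # decimal petabyte, in bytes (assumed dataset size)

if __name__ == "__main__":
    full = transfer_hours(PETABYTE, link_gbps=100)
    # Assumption: an in-storage filter discards 90% of the data before transfer.
    filtered = transfer_hours(PETABYTE * 0.10, link_gbps=100)
    print(f"full: {full:.1f} h, filtered: {filtered:.1f} h")
```

At full line rate, 1 PB over 100 Gb/s takes about 22 hours (8 × 10^15 bits ÷ 10^11 bits/s = 80,000 s); shipping only the 10% that survives an in-storage filter cuts that to about 2.2 hours. Real links rarely sustain full line rate, which the `efficiency` parameter approximates.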

Emerging Solutions:

Computational storage devices with embedded processors (e.g., FPGAs, ASICs) can preprocess, filter, or transform data locally.

Near-storage compute (e.g., embedding GPUs in storage servers) reduces data movement.
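The idea shared by both approaches, doing the work where the data lives and shipping only results, can be illustrated with a toy filter pushdown. `StorageNode`, `scan`, and `read_all` are hypothetical names for this sketch; they do not correspond to any real computational-storage API, and the "device" here is just an in-memory list.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical record format for this sketch: (label, payload bytes).
Record = Tuple[int, bytes]
Predicate = Callable[[Record], bool]


@dataclass
class StorageNode:
    """Toy stand-in for a storage device; not a real device or vendor API."""
    records: List[Record]

    def read_all(self) -> Tuple[List[Record], int]:
        """Conventional path: ship every record; return (records, payload bytes moved)."""
        moved = sum(len(payload) for _, payload in self.records)
        return list(self.records), moved

    def scan(self, predicate: Predicate) -> Tuple[List[Record], int]:
        """Near-storage path: apply the predicate locally and ship only the matches."""
        hits = [r for r in self.records if predicate(r)]
        moved = sum(len(payload) for _, payload in hits)
        return hits, moved


def filter_on_host(node: StorageNode, predicate: Predicate) -> Tuple[List[Record], int]:
    """Conventional pipeline: pull everything over the 'network', then filter."""
    records, moved = node.read_all()
    return [r for r in records if predicate(r)], moved


if __name__ == "__main__":
    # 1,000 records of 1 KiB each, labels 0..9 evenly distributed.
    node = StorageNode([(i % 10, bytes(1024)) for i in range(1000)])
    wanted: Predicate = lambda rec: rec[0] == 3

    host_hits, host_bytes = filter_on_host(node, wanted)
    near_hits, near_bytes = node.scan(wanted)

    assert host_hits == near_hits  # same answer either way
    print(f"host-side: {host_bytes} bytes moved, near-storage: {near_bytes} bytes moved")
```

Both paths return the same 100 records, but the host-side path moves 1,024,000 payload bytes versus 102,400 for the near-storage scan. A real device would run the predicate on its embedded FPGA, ASIC, or GPU; the saving comes from the same place, namely that non-matching data never crosses the network.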

AI Workflow Benefits:

Faster data loading and augmentation during training.

Lower-latency inference by processing data at the edge.

Reduced egress costs and network congestion.
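The egress-cost benefit is simple arithmetic: where data transfer is billed (for example, cross-region or internet egress), charges scale with the bytes that leave the storage tier. The sketch below assumes a flat per-GB rate; `price_per_gb` is a placeholder to replace with your provider's actual pricing, and the workload numbers are hypothetical.

```python
def transfer_cost(gb_per_epoch: float, epochs: int, price_per_gb: float,
                  reduction: float = 0.0) -> float:
    """Data-transfer cost for a training run under an assumed flat per-GB rate.

    reduction is the fraction of bytes eliminated before they leave storage
    (e.g., by in-storage filtering or downsampling); 0.0 means no offload.
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    gb_moved = gb_per_epoch * epochs * (1.0 - reduction)
    return gb_moved * price_per_gb


if __name__ == "__main__":
    # Hypothetical workload: 50 TB read per epoch, 20 epochs, placeholder $0.05/GB.
    baseline = transfer_cost(50_000, 20, price_per_gb=0.05)
    offloaded = transfer_cost(50_000, 20, price_per_gb=0.05, reduction=0.8)
    print(f"baseline ${baseline:,.0f} vs offloaded ${offloaded:,.0f}")
```

With these placeholder inputs, an 80% reduction cuts the transfer bill from about $50,000 to about $10,000. The saving is linear in the reduction fraction, so the practical question is how much data the storage tier can actually discard.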

Feasibility Hurdles:

Hardware heterogeneity and software ecosystem fragmentation.

Re-architecting data pipelines for distributed processing.

Cost trade-offs of deploying specialized hardware.
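The cost trade-off can be framed as a break-even calculation: specialized hardware pays off only if the monthly savings it produces exceed its added running cost by enough to recover the upfront spend. A minimal sketch, with every input hypothetical and `break_even_months` a helper invented for illustration:

```python
import math


def break_even_months(upfront_cost: float, monthly_savings: float,
                      monthly_extra_opex: float) -> float:
    """Months until net savings repay the hardware investment.

    Returns math.inf when net monthly savings are zero or negative,
    i.e., the hardware never pays for itself under these assumptions.
    """
    net = monthly_savings - monthly_extra_opex
    if net <= 0:
        return math.inf
    return upfront_cost / net


if __name__ == "__main__":
    # Hypothetical inputs: $120k of computational-storage hardware,
    # $10k/month saved on transfer and compute, $2k/month extra to operate.
    print(break_even_months(120_000, 10_000, 2_000))  # 15.0
```

Under these made-up numbers the hardware pays for itself in 15 months. If the extra operating cost (power, support, and the engineering effort to re-architect pipelines) approached the savings, the payback period would grow without bound, which is the scenario these hurdles warn about.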

Conclusion: While integrating compute and storage shows immense promise for optimizing AI workloads, its adoption hinges on mature tooling, standardized APIs, and careful cost-benefit analysis. Early experiments suggest significant performance gains, making this a trend to watch as cloud providers and hardware vendors innovate.

Zapper Edge LLC

1621 Central Avenue,

Cheyenne, WY 82001, USA

Write to us: Contactus@zapperedge.com